How to use Precision@K to evaluate your recommendation algorithm: End-to-End ML (Part 2/3)

Recommendation algorithms are everywhere. Every time you fire up Netflix, scroll through Spotify, binge on YouTube, or get lost in TikTok, there’s a Machine Learning (ML) algorithm hard at work, nudging you toward that next movie, song, video, or — let’s be real — something else from Amazon you probably don’t need. (If you feel personally attacked, don’t worry — I’m right there with you.)

The reality is that a significant portion of what we consume daily is the result of some highly sophisticated recommendation system. These algorithms aren’t just trying to guess your taste — they’re strategically designed to keep you glued to the screen. Heck, even this article might have found its way to you because of one of those algorithms (thanks, by the way!).

But here’s the kicker: despite their massive role in shaping our digital lives, how much do we actually understand about recommendation algorithms? Not enough, I bet. No worries, though! In the next five minutes, you’ll walk away knowing more than you did before about the magical (and sometimes mischievous) systems pulling the strings behind the content you see.

“You aren’t necessarily aware that when you tell me what music you listen to or what TV shows you watch, you are telling me some of your deepest and most personal attributes.” (Christopher Wylie, the Cambridge Analytica whistleblower)

A recommendation algorithm is a machine learning-based system that uses a user’s past interactions to predict which items (products, movies, articles, etc.) they might like. These past interactions fall into two main types: explicit feedback and implicit feedback. When you rate a product on Amazon or give a video a thumbs up or down, that is explicit feedback, because you deliberately told the platform what you think. On the other hand, the data recorded every time you click on a product, watch a movie, or listen to a song is implicit feedback. Implicit data is noisier than explicit data (a click is not necessarily an endorsement), but there is far more of it, and that sheer volume (larger sample size, fewer errors) usually makes it the more useful of the two.

Content-Based Filtering vs Collaborative Filtering

Content-Based Filtering

This method relies on metadata about the users or the products themselves to recommend products with similar metadata. For example, you might be recommended the movie Gifted (an emotional drama about an uncle raising his niece) after watching Captain America (well, we all know Captain America). Seems a bit out of place, right? The common denominator between the two movies? Chris Evans. The algorithm assumed you would enjoy Gifted because you enjoyed Captain America, which stars Chris Evans, who also plays the lead in Gifted. This is a classic case of content-based filtering using a movie’s cast. Similarly, you can use a movie’s release year or genres.
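To make the idea concrete, here is a minimal sketch of content-based filtering, assuming each movie is described by a hand-made set of tags (lead actor plus genres). The tags, the `most_similar` helper, and the choice of cosine similarity over one-hot tag vectors are illustrative assumptions, not the method used later in this project.

```python
import numpy as np

# Hypothetical metadata: each movie is described by a set of tags (lead actor + genres).
movies = {
    "Captain America": {"Chris Evans", "Action", "Adventure"},
    "Gifted": {"Chris Evans", "Drama"},
    "The Notebook": {"Romance", "Drama"},
}


def tag_vectors(movies):
    """One-hot encode each movie's tags over a shared, sorted vocabulary."""
    vocab = sorted(set().union(*movies.values()))
    position = {tag: i for i, tag in enumerate(vocab)}
    vectors = {}
    for title, tags in movies.items():
        vec = np.zeros(len(vocab))
        for tag in tags:
            vec[position[tag]] = 1.0
        vectors[title] = vec
    return vectors


def cosine(a, b):
    """Cosine similarity between two non-zero vectors."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


def most_similar(title, movies):
    """Return the other movie whose tag vector is closest to `title`'s."""
    vectors = tag_vectors(movies)
    scores = {
        other: cosine(vectors[title], vec)
        for other, vec in vectors.items()
        if other != title
    }
    return max(scores, key=scores.get)


print(most_similar("Captain America", movies))  # Gifted
```

The only tag Captain America and Gifted share is "Chris Evans", and that single overlap is enough to make Gifted its closest match here.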

Cold-start Problem

As you can probably tell by now, recommendation algorithms depend on your past interactions to recommend new content. But when a new user joins the platform, they have neither explicit nor implicit feedback. This scenario is known in the ML community as the “cold-start problem,” and content-based filtering is a common way to tackle it. If you use any major streaming service, you probably remember answering questions about your favorite genres of movies or music during sign-up. Those answers provide enough data to make some initial recommendations and get a new user started on the platform. However, this approach does not take any user interactions into account and relies solely on metadata. That’s where collaborative filtering comes in.
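A cold-start recommender can be as simple as ranking the catalog by how many of a new user’s sign-up genres each title matches. The sketch below assumes a tiny hypothetical catalog with illustrative genre tags; `cold_start_recommendations` is a made-up helper, not any platform’s actual onboarding logic.

```python
# Hypothetical catalog with illustrative genre tags.
catalog = {
    "Toy Story": {"Animation", "Comedy", "Family"},
    "Heat": {"Action", "Crime", "Thriller"},
    "Finding Nemo": {"Adventure", "Animation", "Family"},
    "The Godfather": {"Crime", "Drama"},
}


def cold_start_recommendations(selected_genres, catalog, n=2):
    """Rank titles by how many of the user's sign-up genres they match."""
    selected = set(selected_genres)
    scored = [(len(genres & selected), title) for title, genres in catalog.items()]
    # Most matching genres first; ties broken alphabetically so the output is stable.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    # Only keep titles that match at least one selected genre.
    return [title for overlap, title in scored[:n] if overlap > 0]


print(cold_start_recommendations({"Animation", "Family"}, catalog))
# ['Finding Nemo', 'Toy Story']
```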

Collaborative Filtering

Collaborative filtering recommends items to users based on how other users with similar preferences (explicit feedback) or behaviors (implicit feedback) have interacted with those items.

Collaborative Filtering can be broken down into two sub-types:

User-Based Collaborative Filtering

- Core Idea: Recommends items to a user based on what similar users have liked.

- How It Works: It identifies users who are similar to the target user (based on past behavior, such as ratings or interactions). Then, it recommends items that these similar users liked but the target user hasn’t interacted with yet.

- Example: If User A and User B both like Movie X and Movie Y, and User B also liked Movie Z, the system might recommend Movie Z to User A, assuming they have similar tastes.
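Here is a minimal sketch of user-based collaborative filtering on a toy version of the example above. It assumes cosine similarity between users, a single most-similar neighbour, and a “liked” threshold of 4 stars; the ratings and helper names are hypothetical. Real systems typically aggregate over several similar users rather than just one.

```python
import numpy as np

movies = ["Movie X", "Movie Y", "Movie Z", "Movie W"]
# Toy ratings: rows are users, columns are movies, 0 means "not rated".
ratings = np.array(
    [
        [5, 4, 0, 0],  # User A
        [5, 5, 4, 0],  # User B
        [0, 1, 0, 5],  # User C
    ],
    dtype=float,
)


def user_similarity(ratings):
    """Cosine similarity between every pair of users (rows)."""
    norms = np.linalg.norm(ratings, axis=1, keepdims=True)
    norms[norms == 0] = 1.0  # avoid dividing by zero for users with no ratings
    unit = ratings / norms
    return unit @ unit.T


def recommend_for_user(user, ratings, like_threshold=4.0):
    """Recommend unseen movies that the single most similar user liked."""
    sims = user_similarity(ratings)[user].copy()
    sims[user] = -np.inf  # a user should not be their own neighbour
    neighbour = int(np.argmax(sims))
    unseen = ratings[user] == 0
    liked = ratings[neighbour] >= like_threshold
    return [movies[i] for i in np.flatnonzero(unseen & liked)]


print(recommend_for_user(0, ratings))  # User A -> ['Movie Z']
```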

Item-Based Collaborative Filtering

- Core Idea: Recommends items that are similar to the items a user has already liked.

- How It Works: Instead of focusing on users, it looks at the similarity between items based on how other users have rated or interacted with them. The system then recommends items that are similar to those the user has enjoyed in the past.

- Example: If a user likes Movie X, the system looks at users who also liked Movie X and what other movies they liked. If many users who liked Movie X also liked Movie Z, Movie Z will be recommended to the target user.
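The item-based variant flips the matrix around: instead of comparing users, it compares movies by the ratings they received. A minimal sketch, assuming cosine similarity between movie columns and made-up ratings; `most_similar_item` is a hypothetical helper.

```python
import numpy as np

movies = ["Movie X", "Movie Y", "Movie Z"]
# Toy ratings: rows are users, columns are movies, 0 means "not rated".
ratings = np.array(
    [
        [5, 0, 5],
        [4, 1, 4],
        [5, 0, 4],
        [0, 5, 0],
    ],
    dtype=float,
)


def item_similarity(ratings):
    """Cosine similarity between every pair of movies (columns)."""
    items = ratings.T  # one row per movie
    norms = np.linalg.norm(items, axis=1, keepdims=True)
    norms[norms == 0] = 1.0  # guard against movies with no ratings
    unit = items / norms
    return unit @ unit.T


def most_similar_item(title, ratings):
    """Return the movie whose rating pattern is closest to `title`'s."""
    i = movies.index(title)
    scores = item_similarity(ratings)[i].copy()
    scores[i] = -np.inf  # skip the movie itself
    return movies[int(np.argmax(scores))]


print(most_similar_item("Movie X", ratings))  # Movie Z
```

Users who liked Movie X also rated Movie Z highly, so Z comes out on top, exactly as in the example.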

How can you make the recommendation algorithms work for you instead of the other way around?

Man, these recommendation algorithms — always serving up what they think you want. But how do you make them work for you? Simple: train them! The more you interact (rate, like, skip), the more they learn. Want more indie films or niche content? Start engaging with it and watch the algorithm shift.

Don’t let them get too comfortable, though — mix it up! Manually explore new content to keep them guessing. And remember: use different platforms for different types of content. Let Spotify know your music taste, but rely on YouTube for tutorials. In no time, you’ll be shaping your recommendations instead of letting them shape you.

In our previous article, we talked about the significance of EDA in a machine learning project. Now that we are done with that, it is time to get to every data scientist’s favorite part of an ML project: model building.

Data Transformation

However, before we start building the model, we need to organize the data into a form the model can be fit on. The first step is to filter out movies that don’t have a substantial number of ratings. This step is crucial for reducing noise and improving model accuracy: recommendations based on movies with only a handful of ratings are more likely to be inaccurate, because the system has less information to predict user preferences. So we decided to keep only movies with more than 10 ratings, which reduced the number of movies from 9,724 to 2,121. Next, we need to transform this dataset from a pandas DataFrame into a sparse matrix.
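A minimal pandas sketch of this filtering step, assuming the ratings live in a long-format DataFrame with hypothetical `userId`, `movieId`, and `rating` columns (one row per rating). The threshold is strict (more than 10), matching the rule described above; the toy data is made up.

```python
import pandas as pd


def filter_sparse_movies(ratings: pd.DataFrame, min_ratings: int = 10) -> pd.DataFrame:
    """Keep only rows for movies rated strictly more than `min_ratings` times."""
    # Number of ratings for each row's movie, aligned back to the original rows.
    counts = ratings.groupby("movieId")["rating"].transform("count")
    return ratings[counts > min_ratings]


# Toy data: movie 1 has 11 ratings, movie 2 has only 3.
ratings = pd.DataFrame(
    {
        "userId": list(range(11)) + [0, 1, 2],
        "movieId": [1] * 11 + [2] * 3,
        "rating": [4.0] * 11 + [3.0] * 3,
    }
)

filtered = filter_sparse_movies(ratings)
print(filtered["movieId"].nunique())  # 1
```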

What is a Sparse Matrix, and why do we need one?

As you can imagine, a user in our dataset can only watch and review so many movies. So, when we first transform our DataFrame into a pivot table, we end up with a lot of zeros. These zeros represent movies that a user didn’t rate.

Sparse matrices to the rescue! A sparse matrix stores only the non-zero values of the pivot table (along with their positions), and there are far fewer of those than zeros. That holds for practically every user-rating dataset, since there will almost always be far more movies in the catalog that a given user hasn’t watched than ones they have. So when you work with datasets full of zeros, a sparse matrix is your best friend: it only remembers the things that matter.
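Here is one way to build that sparse matrix with pandas and SciPy. It assumes a long-format ratings table with hypothetical `userId`, `movieId`, and `rating` columns, and a movies-as-rows orientation so that a k-NN model can later compare movies; the toy ratings are made up.

```python
import pandas as pd
from scipy.sparse import csr_matrix

# Toy long-format ratings: one row per (user, movie) rating.
ratings = pd.DataFrame(
    {
        "userId": [1, 1, 2, 3, 3],
        "movieId": [10, 20, 20, 10, 30],
        "rating": [4.0, 3.5, 5.0, 2.0, 4.5],
    }
)

# Movies as rows, users as columns; cells with no rating become 0.
pivot = ratings.pivot(index="movieId", columns="userId", values="rating").fillna(0)

# CSR format stores only the non-zero cells plus their row/column positions.
movie_user_matrix = csr_matrix(pivot.values)

print(pivot.shape)            # (3, 3): 9 cells in the dense table
print(movie_user_matrix.nnz)  # 5: only the actual ratings are stored
```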

In addition to saving memory, a sparse matrix also speeds up computation, because operations only need to touch the non-zero values. It also helps as you scale your product from a few hundred users (our dataset) to millions of users (Netflix), since you still only store entries that have a value. Overall, sparse matrices make algorithms more memory efficient, faster, and more scalable.
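To get a feel for the memory savings, the sketch below compares a dense NumPy array with its CSR equivalent. The 2,000 x 600 size and the roughly 1.5% fill rate are arbitrary assumptions chosen for illustration, not figures from our dataset.

```python
import numpy as np
from scipy.sparse import csr_matrix

rng = np.random.default_rng(0)

# Synthetic movie-by-user matrix: 2,000 movies x 600 users, about 1.5% filled.
dense = np.zeros((2000, 600))
rows = rng.integers(0, 2000, size=18_000)
cols = rng.integers(0, 600, size=18_000)
dense[rows, cols] = rng.uniform(0.5, 5.0, size=18_000)  # ratings are never 0

sparse_matrix = csr_matrix(dense)

# A dense array stores every cell; CSR stores values, column indices, and row pointers.
dense_bytes = dense.nbytes
sparse_bytes = (
    sparse_matrix.data.nbytes
    + sparse_matrix.indices.nbytes
    + sparse_matrix.indptr.nbytes
)
print(f"dense: {dense_bytes:,} bytes, CSR: {sparse_bytes:,} bytes")
```

The dense array costs 8 bytes for every one of its 1.2 million cells, while the CSR version only pays for the roughly 18,000 stored ratings plus one pointer per row.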

Model Building

Finally, we are at the model building stage of this project. Yay! We are using a k-NN (k-Nearest Neighbours) model to find movies that are similar to one another by calculating the distance between every pair of movies; the most similar movies are a movie’s “nearest neighbours.” The k-NN model computes the distance between two movies from the ratings they have received from users. Because unrated entries are stored as zeros, a user who rated neither movie adds nothing to the distance, while a user who rated only one of the two adds that rating in full. As a result, two movies tend to end up close together when they share many users who rated them similarly.

Example matrix of ten movies rated by 10 users:

Analyzing Similarity Between Movies

Example Scenario: Finding Similar Movies to “Inception” (Movie A)

- Rated by Users: Users 1, 2, 5, 6, 9, and 10 rated “Inception.”

- The k-NN algorithm compares these users’ ratings of “Inception” with their ratings of other movies. For example:

- Movie B (The Matrix) is rated only by User 6, so it shares just a single user with “Inception,” which gives the model very little overlap to compare.

- Movie C (The Godfather) is rated by Users 1, 5, and 9, so these ratings can be compared with their ratings for “Inception.”

- Movie E (The Dark Knight) is rated by Users 1, 4, 5, 9, and 10, which overlaps with “Inception,” so the model can calculate the similarity between these two based on those users.

How K-NN Determines Similarity:

- For each movie, the model calculates a distance (e.g., Manhattan distance) between its vector of user ratings and that of every other movie. The movies with the smallest distance are considered the most similar.

- For instance, “Inception” and “The Dark Knight” share several users (Users 1, 5, 9, and 10), so if those users rated the two movies similarly, the algorithm would likely consider them relatively similar.
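A minimal sketch of this idea with scikit-learn’s `NearestNeighbors`, using Manhattan distance on a made-up movie-by-user matrix (four movies, five users). The ratings are invented for illustration. `algorithm="brute"` is used because scikit-learn falls back to brute-force search for sparse input anyway.

```python
import numpy as np
from scipy.sparse import csr_matrix
from sklearn.neighbors import NearestNeighbors

titles = ["Inception", "The Dark Knight", "The Godfather", "Toy Story"]
# Toy movie-by-user ratings (rows = movies, columns = users, 0 = unrated).
dense = np.array(
    [
        [5, 4, 0, 5, 0],
        [5, 5, 0, 4, 0],
        [0, 0, 5, 0, 4],
        [1, 0, 4, 0, 5],
    ],
    dtype=float,
)
movie_user_matrix = csr_matrix(dense)

knn = NearestNeighbors(metric="manhattan", algorithm="brute")
knn.fit(movie_user_matrix)

# Ask for 2 neighbours: the movie itself (distance 0) plus its closest match.
distances, indices = knn.kneighbors(movie_user_matrix[0], n_neighbors=2)
for d, i in zip(distances[0], indices[0]):
    print(titles[i], d)
# Inception 0.0
# The Dark Knight 2.0
```

“The Dark Knight” differs from “Inception” by only 1 star for two users, so its Manhattan distance is 2, whereas the other two movies are 22 and 23 away.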

Model Testing using Precision@K

Now that we have created our model, it is time to put it to the test. Evaluating a recommendation system is not as straightforward as evaluating a regression or classification model, because there is usually no single correct answer to compare each recommendation against, even though the fundamentals remain the same.

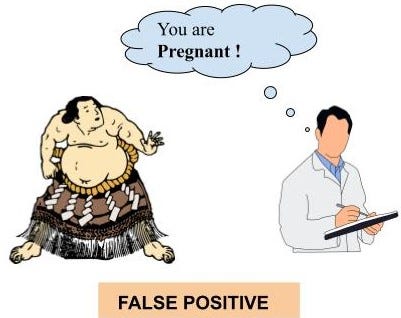

Precision@K enters the chat. In simple terms, Precision@K looks at the top K movies recommended to you (let’s say ten) and checks what fraction of them were “good recommendations.” If four of the ten movies were considered “good recommendations” by the user, Precision@K is 40% (4 out of 10).
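In code, Precision@K is just the number of relevant items among the top K recommendations divided by K. A minimal Python sketch, where the item names and the `relevant` set are placeholders; how “relevant” is decided depends on your data.

```python
def precision_at_k(recommended, relevant, k=10):
    """Share of the top-k recommended items that appear in the relevant set."""
    top_k = recommended[:k]
    hits = sum(1 for item in top_k if item in relevant)
    return hits / k


recommended = [f"movie_{i}" for i in range(10)]
relevant = {"movie_0", "movie_3", "movie_5", "movie_8"}
print(precision_at_k(recommended, relevant))  # 0.4
```

Dividing by `k` rather than by the number of recommendations returned means a system that returns fewer than K items is penalized for the missing slots, which is the usual convention.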

Content platforms can rely on various forms of user interaction (clicks, skips, and likes) to decide whether a recommendation was good or bad. But how do we decide whether a movie is a good recommendation in our dataset? This is where I had to get a bit creative. One way to check the quality of a recommendation is through genres. Imagine that the input movie is classified as adventure, comedy, crime, and romance. I define a “good recommendation” for this movie as one that shares at least 50% of the input movie’s genres. So if the recommended movie is a comedy, crime, and thriller, it shares two of the input movie’s four genres (comedy and crime), which is exactly 50%, and it therefore counts as relevant.
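The genre rule translates directly into a helper function, which can then plug into Precision@K. A minimal sketch, assuming each movie’s genres are available as a set of strings; `is_good_recommendation` and `genre_precision_at_k` are hypothetical names.

```python
def is_good_recommendation(input_genres, recommended_genres, threshold=0.5):
    """True if the recommendation shares at least `threshold` of the input movie's genres."""
    input_genres = set(input_genres)
    if not input_genres:
        return False
    shared = input_genres & set(recommended_genres)
    return len(shared) / len(input_genres) >= threshold


def genre_precision_at_k(input_genres, recommendations_genres, k=10):
    """Precision@K where relevance is decided by genre overlap."""
    hits = sum(is_good_recommendation(input_genres, g) for g in recommendations_genres[:k])
    return hits / k


input_movie = {"Adventure", "Comedy", "Crime", "Romance"}
print(is_good_recommendation(input_movie, {"Comedy", "Crime", "Thriller"}))  # True (2/4)
print(is_good_recommendation(input_movie, {"Comedy", "Drama"}))  # False (1/4)
```

Note that the ratio is taken over the input movie’s genres, so a recommendation with many extra genres is not penalized for them.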

Hyperparameter Tuning

Now that we know how to measure the performance of our k-NN model, it’s time to tune its hyperparameters. We combined all the steps above and ran them over multiple parameter combinations using for loops. The combination of “manhattan” for metric and “ball_tree” for algorithm performed best, giving a Precision@K of 0.3051 with K = 10. In other words, on average roughly 3 of the 10 recommended movies are “good recommendations” as defined above.
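The tuning loop can be sketched as follows. It is a minimal, self-contained version on random toy data (not our dataset), assuming the genre-overlap relevance rule, K = 5, and a small hypothetical grid. One thing worth knowing: in scikit-learn, every `algorithm` option returns exact nearest neighbours, so `algorithm` mainly affects speed, while `metric` is what changes which movies get recommended. BallTree also does not support the cosine metric, so that pair is skipped.

```python
from itertools import product

import numpy as np
from sklearn.neighbors import NearestNeighbors

GENRES = ["Action", "Comedy", "Crime", "Drama", "Romance", "Thriller"]
rng = np.random.default_rng(42)

# Toy data: 40 movies x 12 users, roughly half the cells rated (0 = unrated).
ratings = rng.uniform(0.5, 5.0, size=(40, 12)) * (rng.random((40, 12)) < 0.5)
genres = [{str(g) for g in rng.choice(GENRES, size=2, replace=False)} for _ in range(40)]


def is_relevant(input_genres, rec_genres, threshold=0.5):
    """Genre-overlap rule: share at least half of the input movie's genres."""
    return len(input_genres & rec_genres) / len(input_genres) >= threshold


def mean_precision_at_k(matrix, genres, metric, algorithm, k):
    """Average Precision@K over every movie used as a query."""
    knn = NearestNeighbors(metric=metric, algorithm=algorithm).fit(matrix)
    # Ask for k + 1 neighbours because each movie is normally its own nearest neighbour.
    _, indices = knn.kneighbors(matrix, n_neighbors=k + 1)
    scores = []
    for movie, neighbours in enumerate(indices):
        recs = [int(n) for n in neighbours if n != movie][:k]
        hits = sum(is_relevant(genres[movie], genres[n]) for n in recs)
        scores.append(hits / k)
    return float(np.mean(scores))


def grid_search(matrix, genres, k=5):
    """Try every metric/algorithm pair and return the best one plus all scores."""
    results = {}
    for metric, algorithm in product(["manhattan", "euclidean", "cosine"], ["ball_tree", "brute"]):
        if metric == "cosine" and algorithm == "ball_tree":
            continue  # BallTree does not support the cosine metric
        results[(metric, algorithm)] = mean_precision_at_k(matrix, genres, metric, algorithm, k)
    best = max(results, key=results.get)
    return best, results


best, results = grid_search(ratings, genres)
for params, score in sorted(results.items(), key=lambda item: -item[1]):
    print(params, round(score, 4))
print("best:", best)
```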

We also wanted to look at model performance across eras. In the first part of the series, we hinted that eras with fewer ratings would most likely produce wonky recommendations, and our prophecy came true: as expected, movies released before 1960 had the lowest Precision@K (i.e., the worst recommendations).
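A sketch of the per-era breakdown, assuming you have already computed Precision@10 for each input movie and know its release year. The column names, era boundaries, and numbers below are placeholders for illustration only; they are not our model’s results. Including the count per era is a cheap way to see whether an era’s score rests on only a handful of movies.

```python
import pandas as pd

# Placeholder per-movie scores, purely to show the mechanics (not real results).
per_movie = pd.DataFrame(
    {
        "year": [1942, 1955, 1972, 1994, 2008, 2010],
        "precision_at_10": [0.1, 0.3, 0.3, 0.4, 0.4, 0.2],
    }
)

# Bucket release years into eras; right=False makes each bin [start, end).
per_movie["era"] = pd.cut(
    per_movie["year"],
    bins=[1900, 1960, 1980, 2000, 2030],
    labels=["pre-1960", "1960-1979", "1980-1999", "2000+"],
    right=False,
)

# Average Precision@10 per era, plus how many movies fall in each era.
era_summary = per_movie.groupby("era", observed=True)["precision_at_10"].agg(["mean", "count"])
print(era_summary)
```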

As the last task in this part of the project, we need a function that takes a movie as input and returns a dictionary of its 10 recommended movies, along with the input movie itself. The dictionary uses movie titles as keys and distances as values, sorted in ascending order of distance. We will use this function in our Flask server in the next part of this series.
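One possible shape for that function, sketched with scikit-learn’s `NearestNeighbors` on a tiny made-up matrix. The closure-based `build_recommender` and `recommend` names are hypothetical, and the toy ratings stand in for the real movie-by-user sparse matrix. It relies on two facts: `kneighbors` returns neighbours sorted by ascending distance, and Python dictionaries preserve insertion order, so the returned dictionary is already in ascending order.

```python
import numpy as np
from scipy.sparse import csr_matrix
from sklearn.neighbors import NearestNeighbors


def build_recommender(movie_user_matrix, titles, metric="manhattan"):
    """Fit k-NN once and return a function that recommends by title."""
    knn = NearestNeighbors(metric=metric, algorithm="brute").fit(movie_user_matrix)
    row_of = {title: i for i, title in enumerate(titles)}

    def recommend(title, n_recommendations=10):
        row = movie_user_matrix[row_of[title]]
        # +1 because the input movie is returned too (normally first, at distance 0).
        n = min(n_recommendations + 1, movie_user_matrix.shape[0])
        distances, indices = knn.kneighbors(row, n_neighbors=n)
        # Results come back sorted by ascending distance; dicts keep insertion order.
        return {titles[i]: float(d) for d, i in zip(distances[0], indices[0])}

    return recommend


titles = ["Inception", "The Dark Knight", "The Godfather", "Toy Story"]
movie_user_matrix = csr_matrix(
    np.array(
        [
            [5, 4, 0, 5, 0],
            [5, 5, 0, 4, 0],
            [0, 0, 5, 0, 4],
            [1, 0, 4, 0, 5],
        ],
        dtype=float,
    )
)

recommend = build_recommender(movie_user_matrix, titles)
print(recommend("Inception", n_recommendations=2))
# {'Inception': 0.0, 'The Dark Knight': 2.0, 'Toy Story': 22.0}
```

Fitting once and returning a closure means a web server can build the recommender at startup and reuse it for every request instead of refitting per call.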

With that, we wrap up part two of this series. We’ve explored the key differences between content-based and collaborative filtering, tackled the challenge of sparse data, and learned how to effectively assess recommendation systems. These are the foundational steps that most data scientists focus on, and they’re important, no doubt.

But here’s the thing — most stop here, comfortable with the flashy models they’ve built in their notebooks. The truth is, the real impact comes when you go beyond the notebook. In the final part of this series, we’ll take the next step together, transforming those models into something more useful. Stay tuned to discover how you can elevate your work and become more than just a “notebook data scientist”.